Broken State of Data

Friday, November 19, 2021

(Also see Don’t Chase Data Mesh, Yet.)

In recent years, data has taken a bigger seat at the enterprise table. Data was never unimportant, though the focus used to be more on choosing and running operational systems like SQL or NoSQL databases at scale. Given the prominence of closed-loop ML systems and the potential customer value that analytics use cases (such as statistical, predictive, diagnostic, and machine learning) could generate, enterprises have an even stronger desire to be data-driven.

Just this week, I came across an insightful tweet by Adi Polak, a big data developer at Microsoft, who said, “If you can understand how to produce, collect, manage and analyze data, you’ll own your future.” She could not be more right. However, it turns out that being data-driven is more complicated than what appears on slideware.

Over the last few weeks, I have had a chance to speak with some ex-colleagues about the state of data, the emerging notion of data mesh, and how different vendors talk about data. For those not familiar with data mesh, see Zhamak Dehghani’s original articles, How to Move Beyond a Monolithic Data Lake to a Distributed Data Mesh (2019) and Data Mesh Principles and Logical Architecture (2020). You can also find several videos on YouTube on this subject. As an outsider to this space, I have the luxury of a fresh perspective. Here is what I found out from my explorations.

The state of data is messy for several reasons, but these four seem to stand out.

- Fragmented ownership and accountability across organizational boundaries

- Centralization of specific functions like database management and data engineering, but without full skin in the game

- Skill imbalance — software development teams rarely treat data as part of their service, and data engineers rarely get exposed to the software development lifecycle; they often speak and approach problems differently

- Glue tech that you need to shovel data around and transform it to power analytics use cases, including machine learning

Often, the teams that produce data are decoupled from the downstream analytics and ML use cases. Likewise, the people dealing with analytics use cases are typically unaware of operational systems that produce the data. Data engineering teams get caught up in the middle with a mandate to make an enterprise data-driven. This chasm is not a surprise to the data practitioners who live through this mess.

Such central data teams often lack the ownership and skills to implement this mandate. The same is true on the operational side as well. When reviewing this article, Mammad Zadeh, who ran data engineering teams at LinkedIn and Intuit, rightly pointed out the lack of “right tools to empower developers to take on that responsibility.” When neither side is well-equipped, the enterprise is the loser.

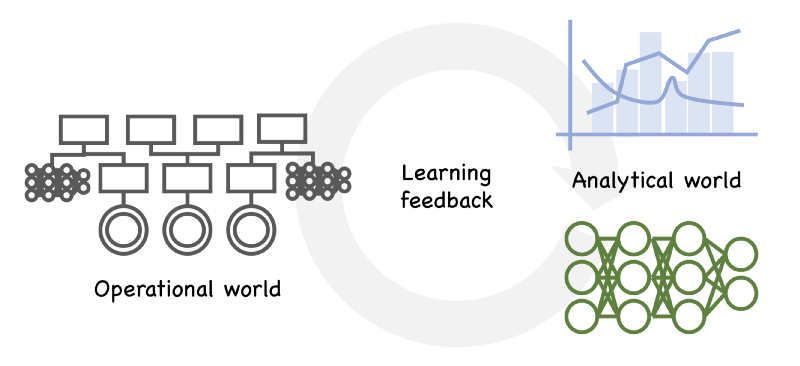

In a recent example, I saw an ML team wait for the data engineering team to fix things in areas they don’t own. A subset of data stopped flowing into a particular destination due to some defect in the source app, but the data engineering team ended up holding that bug in their queue for weeks. They had no idea where to look since they didn’t own those source apps. It is no wonder that data scientists often cite data-related issues like data collection, clean-up, and quality of data at the top of their list of pain points. Machine learning, after all, is a feedback loop problem. You need all parts of the feedback loop working well together for it to learn quickly and produce value. But for that to happen, you must find ways for the operational and analytical worlds to work together seamlessly.

While it is exciting to see the innovation and choice in the database arena, the resulting flexibility has amplified particular challenges. First, it is hard for software development teams to choose a database that fits the data model and anticipated access patterns. Second, once a team makes a choice, they have to deal with the consequences of that choice in the face of data or access pattern changes or lessons learned. Changes consume time, create downtime, and could leave dirty data behind. Third, choice breeds sprawl, making it complicated to trace the source of truth and the lineage, and to establish which data to consume.

These challenges also contribute to increased cost and time for governance. Just knowing what data field exists in what data store could be a program spanning several weeks. A centralized data organization entrusted with solving problems like establishing the source of truth, lineage, model management, and governance is not a happy place to be.
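To make the "what field lives in what store" question concrete, here is a minimal sketch of a field inventory. It assumes you can already list the fields of each store and table, which is often the hard part in practice. The store, table, and field names are hypothetical and only illustrate the idea.

```python
from collections import defaultdict


def index_fields(stores):
    """Invert a {store: {table: [fields]}} mapping into {field: [locations]}."""
    index = defaultdict(set)
    for store, tables in stores.items():
        for table, fields in tables.items():
            for field in fields:
                index[field].add(f"{store}.{table}")
    # Sort locations so the result is stable and easy to diff over time.
    return {field: sorted(locations) for field, locations in index.items()}


# Hypothetical inventory; real input would come from each store's metadata.
stores = {
    "orders_db": {"orders": ["order_id", "customer_email"]},
    "crm_store": {"contacts": ["customer_email", "phone"]},
}

field_index = index_fields(stores)
print(field_index["customer_email"])
```

Even a small index like this shows sprawl quickly: a field that appears in more than one location is a candidate for a source-of-truth conversation.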

The situation on the analytical side seems worse, with the work often devolving into keeping things going, or simply to “keep the lights on.” By this, I don’t mean that enterprises struggle to do analytics or machine learning. There are great products and technologies for these use cases for sure, but they involve stitching together multiple sources of data and performing data movement and transformations. In the current state of affairs, the success of the analytical world depends on first finding the suitable glue and then keeping that glue together end to end. When the glue breaks, things can go unnoticed for a while. When someone notices, many people may need to contribute to fixing it. The current state of affairs does not seem to offer a winning strategy.
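One way to shorten the time a broken pipe goes unnoticed is a freshness check on each source. The following is a minimal sketch, assuming you can record the timestamp of the last record received per source. The source names and the six-hour threshold are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def find_stale_sources(last_seen, max_age, now=None):
    """Return the names of sources whose latest record is older than max_age."""
    now = now or datetime.now(timezone.utc)
    return sorted(name for name, ts in last_seen.items() if now - ts > max_age)


# Hypothetical snapshot: when each source last delivered a record.
now = datetime(2021, 11, 19, 12, 0, tzinfo=timezone.utc)
last_seen = {
    "checkout-service": now - timedelta(minutes=5),
    "profile-service": now - timedelta(days=3),
}

stale = find_stale_sources(last_seen, max_age=timedelta(hours=6), now=now)
print(stale)  # ['profile-service']
```

A check like this only tells you that something stopped flowing. Routing the alert to the team that owns the source app is the organizational part that tooling alone does not solve.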

In my opinionated observation, there is a lot of vendor-speak in the material I reviewed, which shows that the buyers for the products are not the doers but decision-makers keen to get some outcomes quickly. Such decision-makers may lack time to dive deep. I believe that tech designed and sold to decision-makers contributes to widening the chasm between operational and analytical worlds and adds more glue in between.

Also, look at the tool landscape, like Matt Turck’s 2021 Machine Learning, AI and Data (MAD) Landscape. It’s a tool bazaar. Making sense of the landscape can be challenging, and you may not know if you’re getting the value you want or just adding more tools to your tool chest. The number of tools in the landscape is growing, and you will undoubtedly need some handcrafted glue to put things together to drive value quickly. Building upon an analogy by Erik Bernhardsson, Benn Stancil describes this situation better in his post The Modern Data Experience, where he says that “hyperspecialization (of tools) makes us great at chopping onions and baking apple tarts, but it’s a bad way to manage a restaurant.” In my experience, ML problems are like running a restaurant: you need to establish a decent closed feedback loop to derive value.

Finally, many organizations seem to have left out data in their microservices and DevOps transformations of the prior decade. These transformations radically changed technology and culture through massive decentralization of infrastructure, people, and responsibilities. Yet, we still see large data engineering organizations stuck between producers and consumers of data, holding the problematic parts.

Centralization of certain functions isn’t bad as long as there is a clear shared responsibility model. Consider, for example, centralized platform teams that provide CI/CD tools for cloud environments. There is a clear separation of concerns and a shared responsibility model, with platform teams owning the tools and individual dev teams owning their apps and their apps’ health. However, I don’t believe that the current toolset and patterns can enable a similar separation of concerns for data. Consequently, I don’t think it is fair for organizations to entrust data engineering teams to own discovery, governance, data cleanup, etc., in addition to the infrastructure and tools.

When reviewing a draft of this article, my one-time colleague and mentor, Debashis Saha, who has led data engineering teams at eBay and PayPal, made an excellent observation. He said that “today’s technology creates a polarized architecture” and that “centralized data teams cannot keep up with the needs of the business or the decentralized operational teams that create the data mess.” It’s just hard to succeed.

Where do these challenges leave us? I have a few hypotheses to offer.

First, I don’t believe that the analytical world will drastically improve unless we take the domain-driven approach to data very seriously in the operational world. Any change needs to start with data production, most of which usually happens in the operational world. We likely require a new generation of developer-facing abstractions for operational systems to better bridge with the analytical systems. I don’t have answers; I have a hunch.

Second, we need organizational transformations to implement “data as a product” with a bounded context. It requires shifting responsibilities. You can have centralized teams provide and operate shared tech, but you have to give the data ownership and accountability back to domain teams. You also have to hire appropriately and adjust goal setting and planning rituals to support such decentralization. I’m not the first one to say this. Zhamak describes data mesh as a “decentralized sociotechnical approach.” I want to emphasize the social aspect of this description because you have to make changes at every level to reap the benefits of the concept of data as a product. But transformations are expensive and require leadership with tenacity, endurance, and foresight. These can be hard to come by.
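As a rough illustration of what “data as a product” with a named owner could look like in code, here is a minimal sketch of a data product descriptor that a domain team publishes alongside its service. This is not taken from the data mesh articles; the `DataProduct` class, its fields, and the example values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DataProduct:
    """Hypothetical descriptor a domain team publishes for its data."""

    name: str
    domain: str      # the bounded context the data belongs to
    owner_team: str  # who is accountable when the data breaks
    schema: dict     # field name -> expected Python type

    def violations(self, record):
        """List the ways a record fails to match the published schema."""
        problems = []
        for field, expected in self.schema.items():
            if field not in record:
                problems.append(f"missing field: {field}")
            elif not isinstance(record[field], expected):
                problems.append(
                    f"wrong type for {field}: expected {expected.__name__}"
                )
        return problems


orders = DataProduct(
    name="orders",
    domain="checkout",
    owner_team="checkout-team",
    schema={"order_id": str, "amount_cents": int},
)

print(orders.violations({"order_id": "o-1", "amount_cents": "1200"}))
```

The point of the sketch is the `owner_team` field more than the validation: when a record fails the check, there is a named domain team to go to rather than a central queue.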

Third, while the core principles of the data mesh, such as (1) domain-oriented decentralized data ownership and architecture, (2) data as a product, (3) self-service data infrastructure as a platform, and (4) federated computational governance, promise a better state for the analytical world, I don’t believe that there is a straight road ahead for all the reasons I describe above. Each of these four items requires significant shifts in tech, processes, roles, and responsibilities. I would march with eyes wide open, start from first principles, and be open to changing our minds as we go.

Thanks to Debashis Saha for several discussions and feedback on this article. Also, thanks to Mammad Zadeh and Bala Natarajan for their perspectives and feedback.